Scams make up the majority of illicit activity in the cryptocurrency sector. Findings from the Federal Bureau of Investigation (FBI) show that U.S. citizens lost $9.3 billion to crypto scams last year.

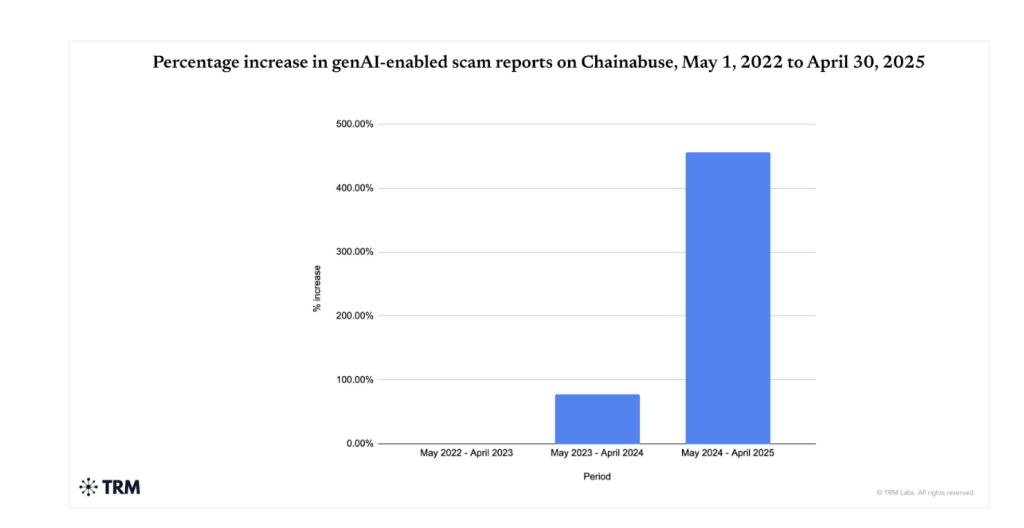

The rise of artificial intelligence (AI) has only made this worse. According to blockchain analytics firm TRM Labs, there was a 456% increase in AI-facilitated scams in 2024 compared to previous years.

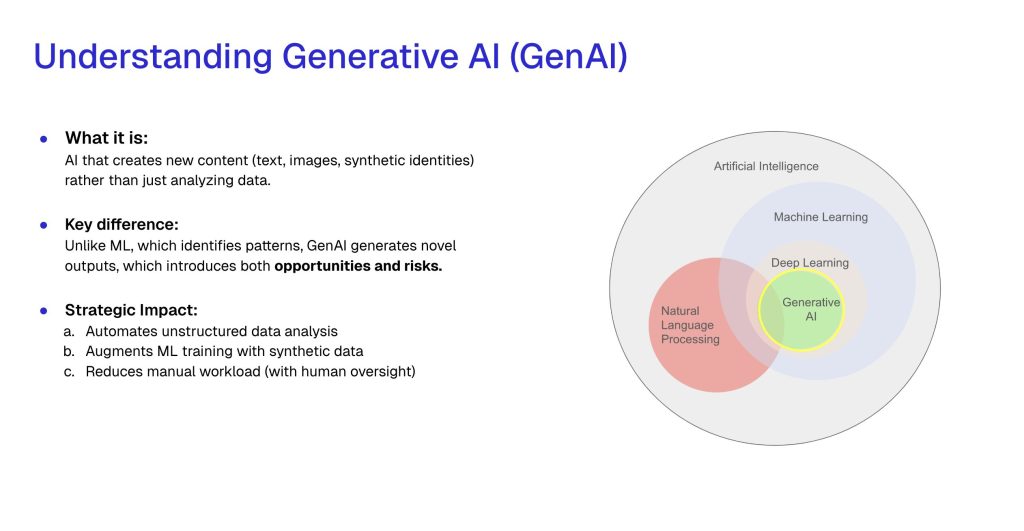

As generative AI (GenAI) advances, bad actors can now deploy sophisticated chatbots, deepfake videos, cloned voices, and automated networks of scam tokens at a scale never seen before. As a result, crypto fraud is no longer a human-driven operation. It is algorithmic, fast, adaptive, and increasingly convincing.

Scams Moving at Lightning Speed

Ari Redbord, global head of policy and government affairs at TRM Labs, told Cryptonews that generative models are being used to launch thousands of scams simultaneously. “We are seeing a criminal ecosystem that is smarter, faster, and infinitely scalable,” he said.

Redbord explained that GenAI models can tune in to a victim’s language, location, and digital footprint. For instance, he noted that in ransomware, AI is being used to select the victims most likely to pay, draft ransom demands, and automate negotiation chats.

In social engineering, Redbord mentioned that deepfake voices and videos are being used to defraud companies and people in “executive impersonation” and “family emergency” scams.

Finally, in on-chain scams, AI tools write scripts that can move funds across hundreds of wallets within seconds, laundering at a pace no human could match.
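To make the "hundreds of wallets within seconds" pattern concrete, here is a minimal Python sketch of one way such rapid fan-out could be flagged. It is an illustration only, not TRM Labs' method: the `Transfer` record, the `find_fan_out_senders` function, and the thresholds (100 receivers in 10 seconds) are all assumptions made for this example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    # Hypothetical record shape; real on-chain data carries many more fields.
    sender: str
    receiver: str
    timestamp: float  # seconds


def find_fan_out_senders(transfers, min_receivers=100, window_seconds=10.0):
    """Return senders that paid at least `min_receivers` distinct wallets
    within any span of `window_seconds` (thresholds are illustrative)."""
    by_sender = defaultdict(list)
    for t in transfers:
        by_sender[t.sender].append(t)

    flagged = set()
    for sender, sent in by_sender.items():
        sent.sort(key=lambda t: t.timestamp)
        window = deque()           # transfers currently inside the time window
        counts = defaultdict(int)  # receiver -> number of transfers in window
        for t in sent:
            window.append(t)
            counts[t.receiver] += 1
            # Evict transfers that are older than the window allows.
            while t.timestamp - window[0].timestamp > window_seconds:
                old = window.popleft()
                counts[old.receiver] -= 1
                if counts[old.receiver] == 0:
                    del counts[old.receiver]
            if len(counts) >= min_receivers:
                flagged.add(sender)
                break
    return flagged
```

A real system would combine a signal like this with many others, since exchanges and payment processors also send funds to many wallets in quick succession for legitimate reasons.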

AI-Powered Defenses

The crypto industry is turning to AI-powered defenses to fight back against these scams. Blockchain analytics firms, cybersecurity companies, exchanges, and academic researchers are now building machine-learning systems designed to detect, flag, and mitigate fraud long before victims lose funds.

For example, Redbord stated that artificial intelligence is built into every layer of TRM Labs’ blockchain intelligence platform. The firm uses machine learning to process trillions of data points across more than 40 blockchain networks. This allows TRM Labs to map wallet networks, identify typologies, and surface anomalous behavior that indicates potential illicit activity.

“These systems don’t just detect patterns—they learn them. As the data changes, so do the models, adapting to the dynamic reality of crypto markets,” Redbord commented.

This lets TRM Labs see what human investigators might otherwise miss: thousands of small, seemingly unrelated transactions forming the signature of a scam, laundering network, or ransomware campaign.

AI risk platform Sardine is taking a similar approach. The security company was founded in 2020, a time when prominent crypto scams were just beginning to take place.

Alex Kushnir, Sardine’s head of commercial development, told Cryptonews that the company’s AI fraud detection consists of three layers.

“Data is core to everything we do. We capture deep signals behind every user session that happens on financial platforms like crypto exchanges—like device attributes, whether apps are tampered, or how a user is behaving. Secondly, we tap a wide network of trusted data providers for any user inputs. Finally, we use our consortium data—which may be the most important for fighting fraud—where companies can share data relating to bad actors with other companies.”

Kushnir added that Sardine uses a real-time risk engine to act on each of the indicators mentioned above to combat scams as they happen.
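As a rough illustration of how signals from the three layers Kushnir describes might feed a single real-time decision, consider the Python sketch below. It is not Sardine's engine: the field names, point values, and thresholds are invented for this example, and a production system would typically use a trained machine-learning model rather than a fixed point scheme.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    # All fields are hypothetical stand-ins for the three layers.
    app_tampered: bool           # layer 1: device attributes
    typing_looks_scripted: bool  # layer 1: user behavior
    identity_mismatch: bool      # layer 2: third-party data provider check
    consortium_hits: int         # layer 3: reports shared by other companies


def risk_score(s: SessionSignals) -> int:
    """Return a score from 0 to 100 using illustrative point values."""
    score = 0
    if s.app_tampered:
        score += 30
    if s.typing_looks_scripted:
        score += 20
    if s.identity_mismatch:
        score += 20
    # Each consortium report adds 10 points, capped at three reports.
    score += 10 * min(max(s.consortium_hits, 0), 3)
    return score


def decide(score: int, review_at: int = 30, block_at: int = 60) -> str:
    """Map a score to an action; the thresholds are assumptions."""
    if score >= block_at:
        return "block"
    if score >= review_at:
        return "review"
    return "allow"


session = SessionSignals(
    app_tampered=True,
    typing_looks_scripted=False,
    identity_mismatch=False,
    consortium_hits=3,
)
print(decide(risk_score(session)))  # block
```

The point of the sketch is the shape of the pipeline: signals are gathered per session, combined into one score, and turned into an action while the transaction is still in flight.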

Kushnir also pointed out that today, agentic AI and large language models (LLMs) are used mainly for automation and efficiency rather than real-time fraud detection.

“Rather than hard-code fraud detection rules, now anyone can just type out what they want a rule to evaluate, and an AI agent will build it, test it, and deploy that rule for them if it meets their requirements. The AI agents can even proactively recommend rules based on emerging patterns. But when it comes to predicting risk, machine learning is still the gold standard,” he said.

AI vs. AI Use Cases

These tools are already proving to be effective.

Matt Vega, Sardine’s chief of staff, told Cryptonews that once Sardine detects a pattern, the firm’s AI performs a deep analysis of the trend and recommends ways to stop the attack vector.

“This would normally take a human a day to complete, but using AI takes seconds,” he said.

For example, Vega explained that Sardine works closely with leading crypto exchanges to flag unusual user behavior. User transactions are run through Sardine’s decision platform, and AI analysis helps determine the outcome of these transactions, giving exchanges advance notice.

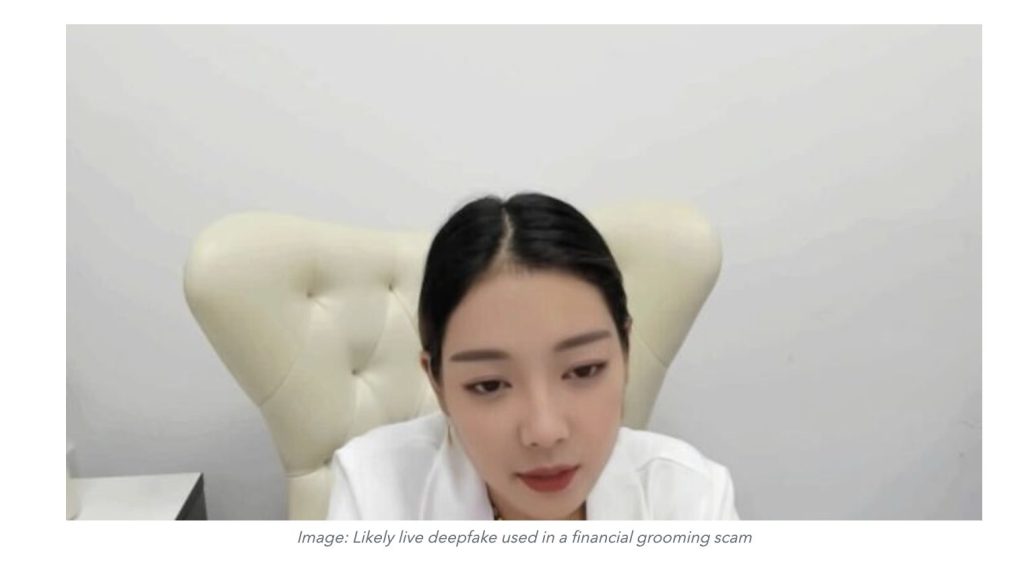

A TRM Labs blog post further explains that in May, the firm witnessed a live deepfake during a video call with a likely financial grooming scammer. This type of scammer establishes a long-term, trusting, and often emotional or romantic relationship with a victim to gain access to their money.

“We suspected this scammer was using deepfake technology due to the person’s unnatural-looking hairline,” Redbord explained. “AI detection tools enabled us to corroborate our assessment that the image was likely AI-generated.”

Although TRM Labs spotted the deepfake in this case, this specific scam and others related to it have stolen about $60 million from unsuspecting victims.

Cybersecurity company Kidas is also using AI to detect and prevent scams. Ron Kerbs, founder and CEO of Kidas, told Cryptonews that as AI-powered scams have increased, Kidas’ proprietary models can now analyze content, behavior, and audio-visual inconsistencies in real time to identify deepfakes and LLM-crafted phishing at the point of interaction.

“This allows for instant risk scoring and real-time interdiction, which is the only way to counter automated, scaled fraud operations,” Kerbs said.

Kerbs added that just this past week, Kidas’ tool successfully intercepted two distinct crypto scam attempts on Discord.

“This rapid identification showcases the tool’s crucial real-time behavioral analytics capability, effectively preventing the compromise of user accounts and potential financial loss,” he said.

Protecting Against AI-Powered Scams

While it’s clear that AI-powered tools are being used to detect and prevent sophisticated scams, these attacks will continue to increase.

“AI is lowering the barrier to entry for sophisticated crime, making these scams highly scalable and personalized, so they will certainly gain more traction,” Kerbs remarked.

Kerbs believes that semi-autonomous malicious AI agents will soon be able to orchestrate entire attack campaigns with minimal human oversight, including untraceable voice-to-voice deepfake impersonation in live calls.

Although alarming, Vega pointed out that there are specific steps users can take to prevent falling victim to such scams.

For instance, he explained that many attacks rely on spoofed websites, where users who eventually land on them click on fake links.

“Users should look for Greek alphabet letters on websites. American multinational technology company Apple recently fell victim to this, as an attacker created a fake website using a Greek ‘A’ letter in Apple. Users should also stay away from sponsored links and pay attention to URLs.”
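The lookalike-letter trick Vega describes can be checked programmatically. The Python sketch below flags a URL whose hostname mixes Latin letters with letters from another script, such as a Greek capital alpha standing in for "A". It uses only the standard library. The function names and the example domain are made up for illustration, and the check is deliberately simple: it will not catch hostnames already converted to their ASCII "xn--" form, or names written entirely in a lookalike script.

```python
import unicodedata
from urllib.parse import urlsplit


def scripts_in(text):
    """Return the scripts used by the letters in `text`, taken from the
    first word of each character's Unicode name (e.g. LATIN, GREEK)."""
    scripts = set()
    for ch in text:
        if ch.isalpha():
            scripts.add(unicodedata.name(ch, "UNKNOWN").split()[0])
    return scripts


def looks_spoofed(url):
    """Flag a URL whose hostname mixes Latin letters with another script."""
    host = urlsplit(url).hostname or ""
    scripts = scripts_in(host)
    return "LATIN" in scripts and len(scripts) > 1


# "\u0391" is GREEK CAPITAL LETTER ALPHA, which looks like a Latin "A".
print(looks_spoofed("https://www.apple.com/"))            # False
print(looks_spoofed("https://www.\u0391pple.com/login"))  # True
```

Browsers and security tools apply more thorough versions of this idea, but even a basic mixed-script check shows why paying attention to URLs matters.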

In addition, companies like Sardine and TRM Labs are working closely with regulators to determine how to build guardrails that use AI to mitigate the risk of AI-powered scams.

“We’re building systems that give law enforcement and compliance professionals the same speed, scale, and reach that criminals now have—from detecting real-time anomalies to identifying coordinated cross-chain laundering. AI is allowing us to move risk management from something reactive to something predictive,” Redbord stated.